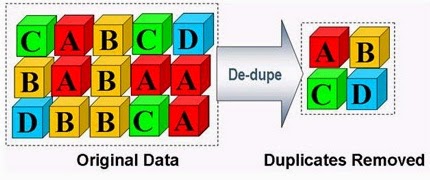

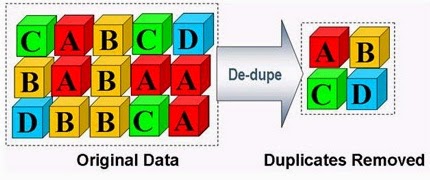

What is it?

Deduplication is the process of eliminating duplicate copies of data. Dedup is generally either file-level, block-level, or byte-level. Chunks of data — files, blocks, or byte ranges — are checksummed using some hash function that uniquely identifies data with very high probability. When using a secure hash like SHA256, the probability of a hash collision is about 2^-256 = 10^-77 or, in more familiar notation, 0.00000000000000000000000000000000000000000000000000000000000000000000000000001. For reference, this is 50 orders of magnitude less likely than an undetected, uncorrected ECC memory error on the most reliable hardware you can buy.

Installing zfs on Ubuntu server 12.04

sudo apt-get -y install python-software-properties

sudo add-apt-repository ppa:zfs-native/stable

sudo apt-get update

sudo apt-cache search zfs

sudo apt-get install ubuntu-zfs

Run the zfs commands to make sure it works

sudo zfs

sudo zpool

There is no extra configuration for ZFS running on Ubuntu

zpool command configure zfs storage pools

zfs command configure zfs filesystem

zpool list shows the total bytes of storage available in the pool.

zfs list shows the total bytes of storage available to the filesystem, after

redundancy is taken into account.

du shows the total bytes of storage used by a directory, after compression

and dedupe is taken into account.

“ls -l” shows the total bytes of storage currently used to store a file,

after compression, dedupe, thin-provisioning, sparseness, etc.

Link for reference:

https://blogs.oracle.com/bonwick/entry/zfs_dedup